Throughout history, humankind has relentlessly pursued innovation and progress, from the mechanical marvels of the Industrial Revolution to today's digital era. In 2023, a new chapter began, shaped by the confluence of human creativity and machine intelligence. In this unfolding story, Generative AI is the protagonist, with the potential to reshape the world. With Generative AI, we can build machines that can imagine and inspire, transforming every facet and function of the modern enterprise.

For the uninitiated, Generative AI is a type of AI technology that can produce new, realistic content (text, images, audio, video, software code, and more) based on patterns learned from its training data. Generative AI first appeared on the Gartner Hype Cycle for Artificial Intelligence in 2020 and hit mainstream headlines in late 2022, when OpenAI launched ChatGPT, a chatbot able to produce human-like responses.

In this article, we look at how enterprises can integrate Generative AI into their systems through custom LLMs, introduce MathCo's proprietary framework for guiding organizational Generative AI journeys, and show how to evaluate the right solution for your needs.

Check out our white paper for a deep dive into Generative AI, how it spurred the adoption of Large Language Models (LLMs), the business and technological landscape, and more.

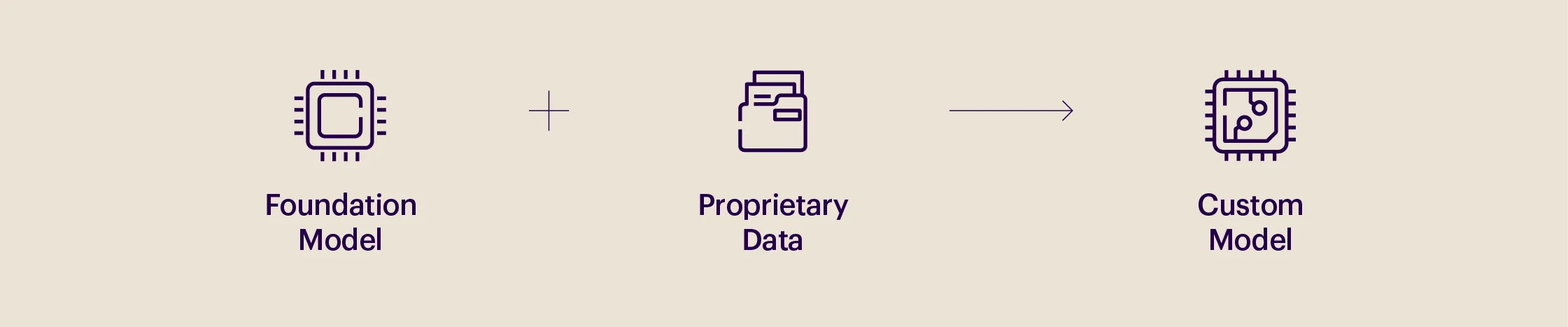

Custom LLMs: Leveraging open-source foundation models

As Generative AI takes center stage, global organizations are competing to tap into its transformative potential. Organizations have widely embraced LLMs for their ability to search, understand, and synthesize data, and many are rushing to introduce natural language assistants into their business functions. In reality, however, broad models like GPT-4 (one of the models behind ChatGPT) are trained largely on public internet data. As a result, they may lack the business context needed for many enterprise use cases.

Custom LLMs, on the other hand, can be enhanced through context enrichment or training on enterprise data. Built on open-source foundation models, these custom models are tailored to specific business requirements while offering greater control and data privacy. For instance, a CPG (Consumer Packaged Goods) company that wants to build a sales support chatbot can train an open-source foundation model on proprietary organizational data. This reduces the risk of sharing sensitive data with third-party models and gives the company greater control over the behavior and functionality of the use cases the LLM powers, such as the chatbot. The company can also fine-tune the model further to improve the accuracy and relevance of its responses and to maintain the desired tone and style.

The Generative AI Grid

Using custom LLMs or Generative AI applications effectively for enterprise use cases demands a strategic approach. Organizations must consider two fundamental aspects: the business context required to fine-tune the model (raw data, domain knowledge, market data, etc.) and the complexity of the action the model needs to perform.

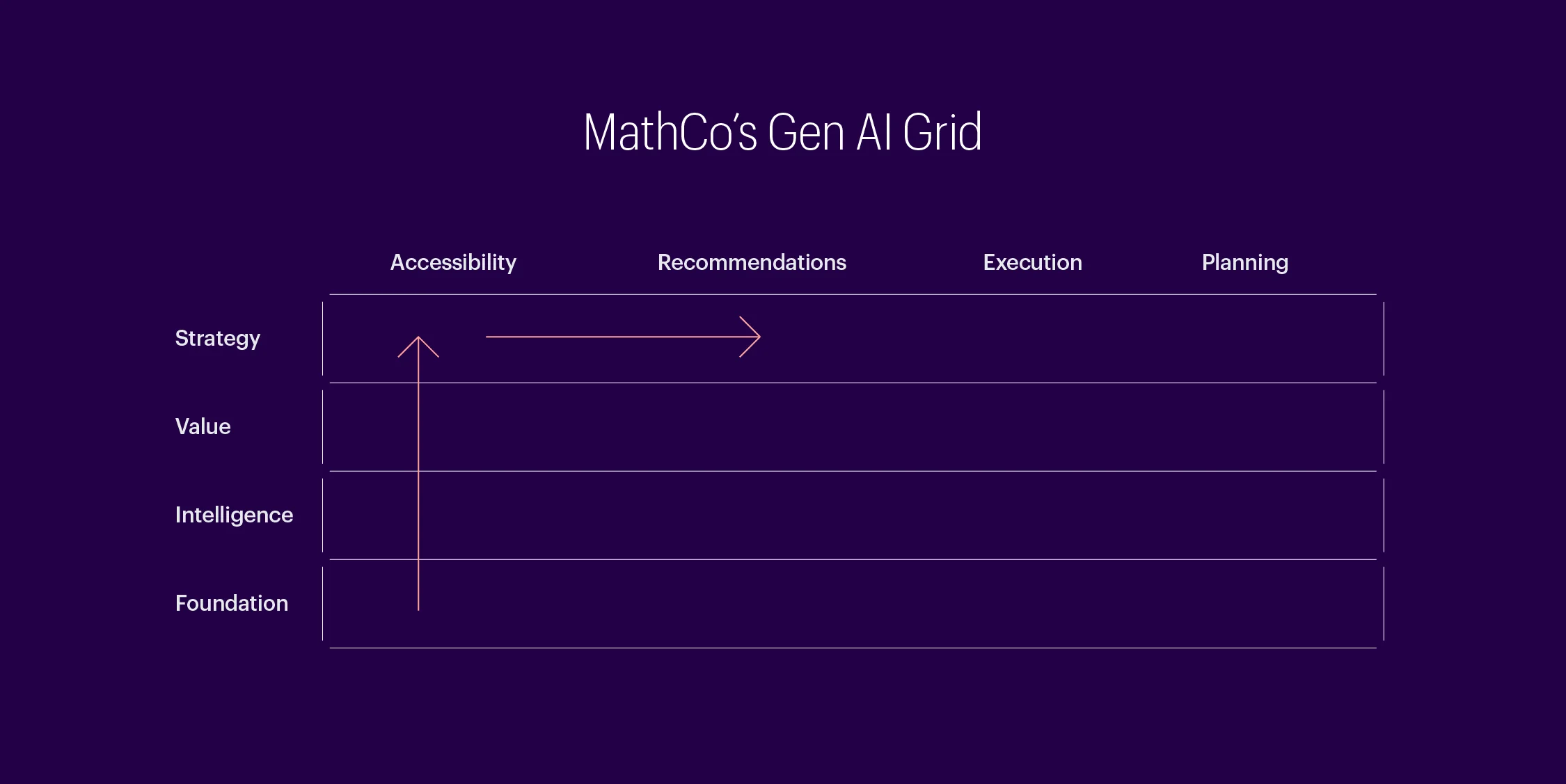

At MathCo, we have created a proprietary framework called the "Generative AI Grid" that serves as a compass, guiding organizations toward seamless Generative AI integration and amplifying both efficiency and innovation. It helps enterprises assess the business context a specific use case requires and the complexity it involves. Together, these determine how much customization the model needs, ranging from simple language correction to intricate content generation.

In the following section, let's look at the components of the Generative AI Grid.

The vertical axis:

The vertical axis of the Generative AI Grid features four layers: Foundation, Intelligence, Value, and Strategy. As depicted above, the demand for business context increases as we move up the layers. Vertical integration lets assets be reused across layers, while each layer is accessed by a distinct user persona with its own business objectives. Assets along this axis serve as inputs to more advanced LLMs, enriching them and making them more contextual.

#1 Foundation Layer – The foundation layer deals with raw and operational data, which may be structured, semi-structured, or unstructured. This layer is typically accessed by a data wrangler.

#2 Intelligence Layer – Moving upward, we reach the intelligence layer, which focuses on creating KPIs (Key Performance Indicators), generating insights, and similar work. Its assets provide additional business context for training the model. Data scientists and analysts build these assets from elements of the foundation layer.

#3 Value Layer – Business users come into play at the value layer, which draws on knowledge of existing workflows and solutions. Assets here are used primarily to make decisions and generate value for the organization.

#4 Strategy Layer – The strategy layer engages C-suite executives with a long-term outlook. Giving this layer access to all the assets in the layers below unlocks more ambiguous, forward-looking use cases.

The horizontal axis:

The horizontal axis of the Generative AI Grid also has four levels: Accessibility, Recommendations, Execution, and Planning. The complexity of the use cases and their solutions increases as we move across these levels. Feedback loops across the vertical layers are critical for navigating the horizontal spectrum.

#1 Accessibility Level – The accessibility level covers rudimentary use cases, whose solutions involve basic tasks such as discovering patterns and requesting information.

#2 Recommendations Level – As the name suggests, use cases at this level require the model or solution to provide recommendations based on multiple inputs.

#3 Execution Level – At the execution level, use cases become more complex and require advanced solutions, typically ones that work with other systems to automate and execute tasks.

#4 Planning Level – The planning level involves the highest degree of complexity. Use cases at this level require solutions that create plans and even intervene when necessary.

Applying the Generative AI Grid: Evaluating business context and complexity

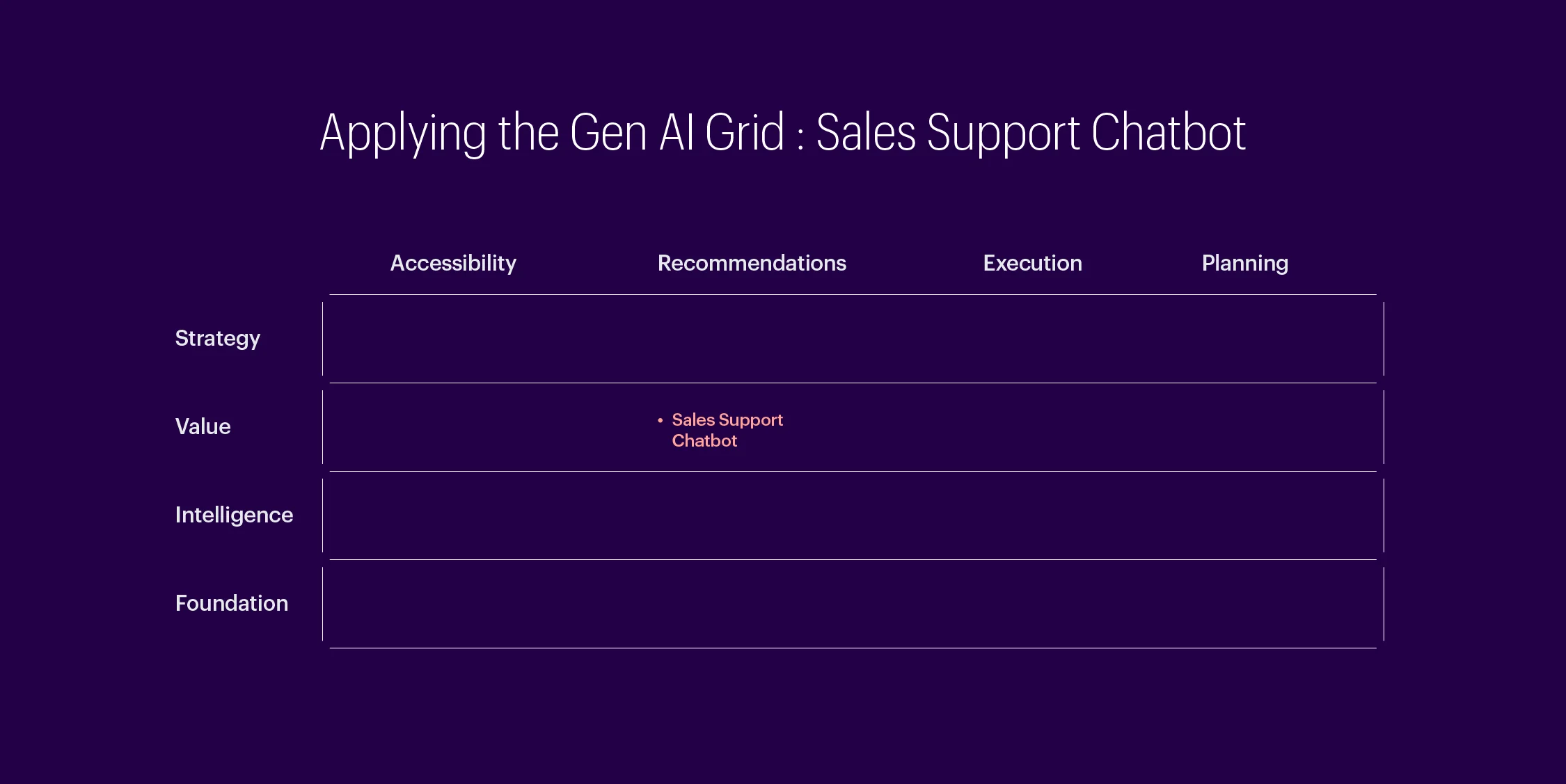

To see how the Generative AI Grid works in practice, let's expand on the use case introduced in the custom LLMs section: a Sales Support Chatbot for a CPG company.

The primary goal of this chatbot is to assist senior sales executives by offering pertinent insights and recommendations during customer interactions. To operate effectively, it needs a substantial amount of business context, and it must generate its suggestions from the business context fed into it.

Consequently, when we position this scenario on the Generative AI Grid, it sits at the intersection of the Value layer and Recommendations level.
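To make this placement concrete, the grid can be modeled as two ordered axes. The Python sketch below is a hypothetical illustration rather than part of the Generative AI Grid itself: the names `Layer`, `Level`, `UseCase`, `cell`, and `layers_drawn_on` are invented for this example, and the rule that a use case can reuse assets from its own layer and every layer below it is our assumption, based on the description of vertical integration above.

```python
from dataclasses import dataclass
from enum import IntEnum


class Layer(IntEnum):
    """Vertical axis: the demand for business context grows with the value."""
    FOUNDATION = 1
    INTELLIGENCE = 2
    VALUE = 3
    STRATEGY = 4


class Level(IntEnum):
    """Horizontal axis: the complexity of the action grows with the value."""
    ACCESSIBILITY = 1
    RECOMMENDATIONS = 2
    EXECUTION = 3
    PLANNING = 4


@dataclass(frozen=True)
class UseCase:
    """A hypothetical use case placed on one cell of the grid."""
    name: str
    layer: Layer
    level: Level

    def cell(self) -> tuple[str, str]:
        # Human-readable (layer, level) coordinates of the use case.
        return (self.layer.name.title(), self.level.name.title())

    def layers_drawn_on(self) -> list[Layer]:
        # Assumption: a use case can reuse assets from its own layer
        # and from every layer below it (vertical integration).
        return [layer for layer in Layer if layer <= self.layer]


chatbot = UseCase("Sales Support Chatbot", Layer.VALUE, Level.RECOMMENDATIONS)
print(chatbot.cell())  # ('Value', 'Recommendations')
print([layer.name for layer in chatbot.layers_drawn_on()])
# ['FOUNDATION', 'INTELLIGENCE', 'VALUE']
```

Using ordered enums means that "moving up" a layer or "across" a level reduces to a simple comparison, which mirrors how business context and complexity are described as increasing along each axis.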

Similarly, for other use cases such as content generation, sentiment analysis, and customer service automation, the Generative AI Grid serves as a valuable assessment framework. Placing each use case on the grid gives a holistic view of the business context it requires and the complexity of the action it performs. It also provides a framework for navigating the Generative AI landscape. While an overall roadmap should include moonshot ideas, identifying realistic, readily achievable outcomes is essential. This approach keeps organizations from getting lost in the hype and sets realistic expectations with stakeholders across the enterprise.

Summary

A recent study found that conversational AI assistants increased productivity by 14% for customer service agents [1]. Yet productivity gains are only the starting point. Generative AI holds the key to a multitude of benefits, with the power to positively influence processes, functions, and workflows across a global enterprise. As we continue refining and training these Generative AI applications with relevant business context, we can establish greater interconnectivity and break down silos. This enables stakeholders to analyze data points across functions and layers and generate valuable insights quickly.

Register for our workshop today and discover how we use our proprietary Generative AI Grid for different use cases while exploring the potential of Generative AI. Gain insights into optimizing productivity, fostering innovation, and achieving a competitive edge in the modern business landscape. Reach out to us here.

Bibliography

1. Torres, Jennifer. “Revolution at the Help Desk: How Generative AI Supercharges Customer Service Productivity.” CMSWire.com, June 14, 2023. https://www.cmswire.com/contact-center/can-generative-ai-boost-productivity-attitude-of-customer-service-agents/