The concept of data as a strategic asset has been gaining momentum in recent years. Its importance stems from the invaluable insights it provides, enabling organizations and individuals to make informed choices and drive progress. Data is essential for informed decision-making, problem-solving, innovation, personalization, performance optimization, risk management, scientific advancements, and effective governance. By harnessing the power of data, organizations and individuals can navigate the complexities of the modern world and drive meaningful progress.

Most organizations are continuously striving to make rapid data-driven decisions—both strategic and operational—across multiple critical business units based on trustworthy data. Bad decisions can not only have negative implications internally but can also result in losing an important customer permanently. Therefore, organizations are increasingly adopting emerging technologies, like artificial intelligence (AI), machine learning (ML), Internet of Things (IoT), and cloud computing, both to revolutionize operations and to keep up with competitors.

With the ongoing data explosion, owing to IOT sensors, social media, web and mobile apps, etc., there is a stressing need for real-time and data-driven decision-making by leveraging streaming data analytics. Using streaming analytics, we can analyze multiple streams of data produced by a multitude of components (e.g., IoT devices, social media interactions, financial transactions, customer click-stream, etc.) to generate real-time insights and facilitate faster business decision-making.

This article showcases how we designed a platform-agnostic, real-time streaming analytics engine and how it processes live data feed from Twitter to perform real-time sentiment analysis of users.

Application design

Real-time systems are required to collect data from various sources and process them as they arrive, within a specified time interval, typically in the order of milli-, micro-, or even nanoseconds, and generate a response that delivers value.

Here are some of the key characteristics of real-time applications:

- Low Latency (extremely short processing durations)

- High Availability (fault-tolerant systems)

- Horizontal Scalability (dynamic addition of compute or storage servers based on need)

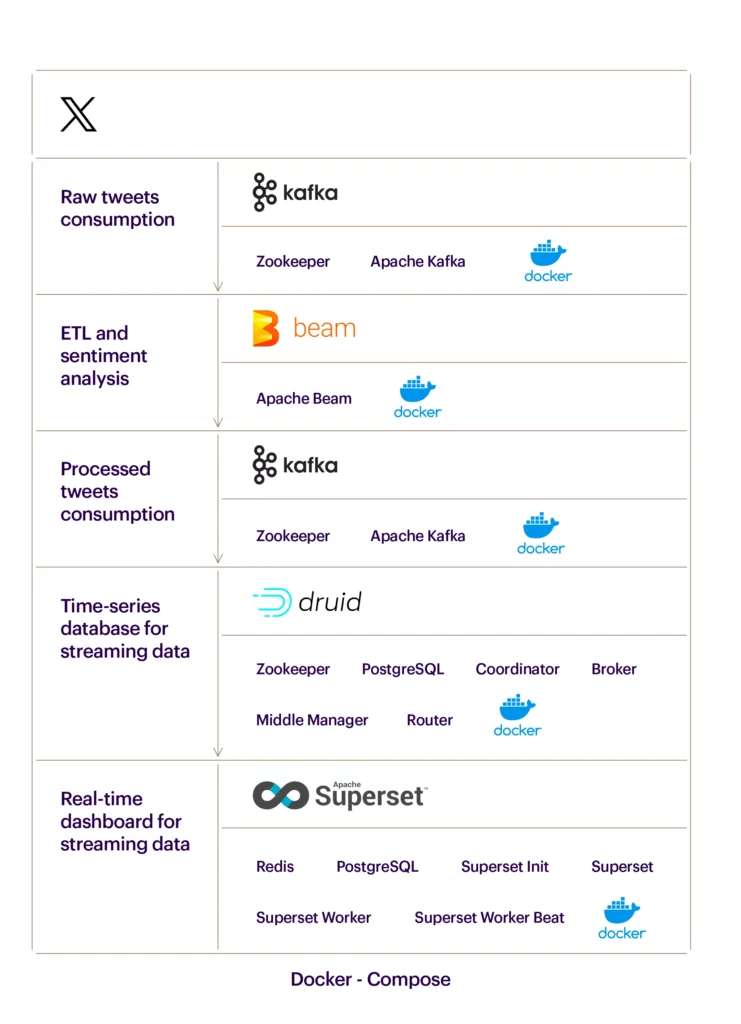

The above flowchart represents the overall streaming analytics process. Let’s look at each component in detail.

Data ingestion

The Twitter Developer API, along with the “tweepy [1]” Python library, is used to read live Twitter feeds and ingest them into Apache Kafka [2] queues.

Tweepy is a popular Python library that provides a convenient way to access and interact with the Twitter API. It simplifies the process of connecting to the Twitter API, retrieving tweets, posting tweets, and performing various other Twitter-related tasks, making it easier for developers to incorporate Twitter functionality into their Python applications.

Data transformation

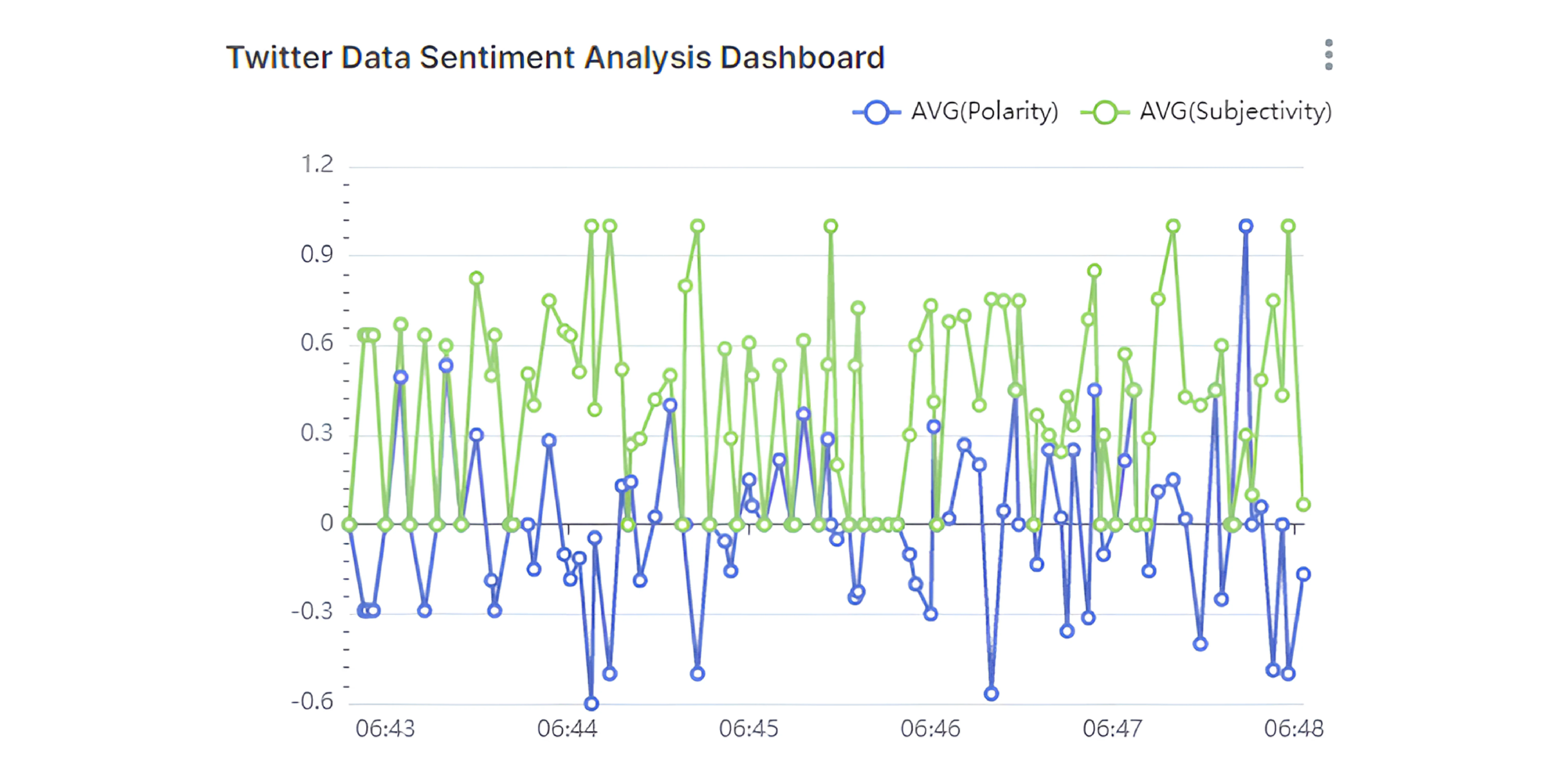

For sentiment analysis, “TextBlob [3]” Python library, which provides a simple API for common NLP tasks such as part-of-speech tagging, sentiment analysis, text classification, etc., is used. Text Blob’s intuitive API and wide range of NLP functionalities make it a popular choice for quick prototyping, educational purposes, and lightweight NLP tasks. Its integration with NLTK and availability of pre-trained models make it a convenient library for various text processing needs.

Apache Beam [4]–a unified model for both batch and streaming data-parallel processing pipelines—is being used to perform data transformations on the raw Twitter data, then pushed to the Kafka “outgoing” topic.

Data storage

Apache Druid [5]—a real-time analytics database designed for fast ad-hoc analytics and supporting high concurrency on large datasets—is being used to store the transformed streaming data from Apache Kafka.

Real-time dashboarding

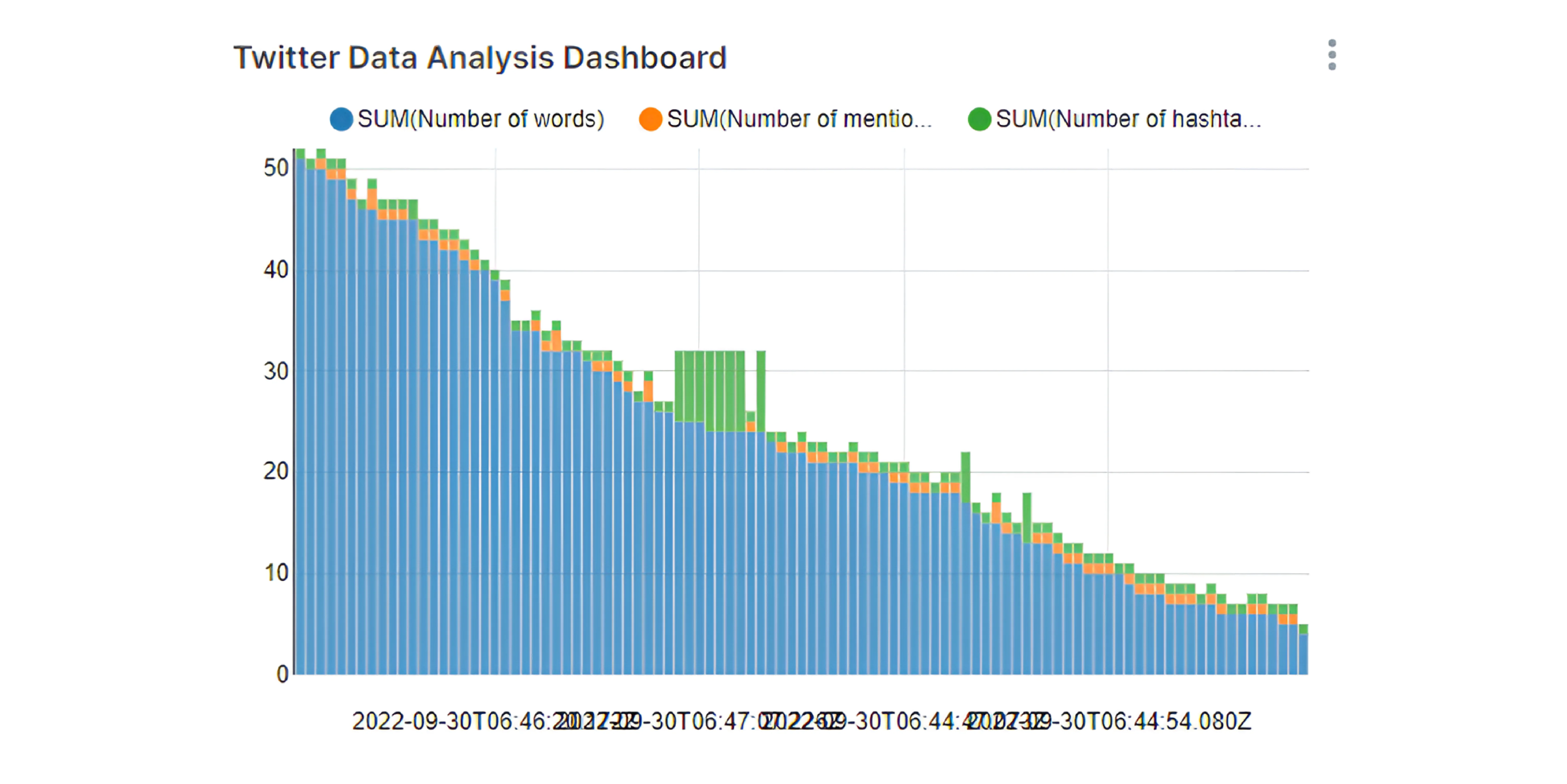

Apache Superset [6]—an open-source, highly scalable application for data exploration and data visualization—is being used as the dashboarding tool for data analysis on users’ sentiments (as shown in the figure below).

Application hosting

The working prototype of a real-time streaming analytics engine has been set up on individual Docker containers, which are hosted on a single virtual machine and orchestrated using Docker Compose.

Summary

Computational analysis of streaming data, such as a Twitter data feed, is a challenging task due to the unstructured and noisy nature of the tweets, which requires integrating the tweet stream processing with ETL tools and ML algorithms to transform and analyze the streaming data.

The framework showcased in this article can be leveraged for a wide variety of use cases, such as real-time recommendation engines, real-time stock trades, fault detection, monitoring and reporting on internal IT systems, real-time cybersecurity for enhanced threat mitigation, and many more.

Further research work in this stream is currently in progress, including integration with fine-tuned ML models for NLP and hosting the Docker containers in a Kubernetes cluster for scalability.

Check out our white paper to learn more about real-time streaming analytics and what it can achieve here.

References

1.“Tweepy/Tweepy: Twitter for Python!” GitHub. Accessed February 1, 2023. https://github.com/tweepy/tweepy.

2. Apache Kafka. Accessed February 1, 2023. https://kafka.apache.org/.

3. “Installation.” Installation – TextBlob 0.16.0 documentation. Accessed February 1, 2023. https://textblob.readthedocs.io/en/dev/install.html.

4.“The Unified Apache Beam Model.” Brand. Accessed February 1, 2023. https://beam.apache.org/.

5. “Apache® Druid.” Apache Druid. Accessed February 1, 2023. https://druid.apache.org/.

6. “Superset.” Welcome. Accessed February 1, 2023. https://superset.apache.org/.