GENESIS - The beginning

A look at the success rates of projects shows that a significant share of analytics projects fail. Gartner predicts that, through 2022, 80% of analytics insights will fail to deliver business outcomes. These are alarming numbers, and it is time we addressed the elephant in the room: how to derive tangible value (ROI) from analytics.

So what is this data product we are obsessing about? There are many definitions, but at heart, any product built on data (that you sell) or any product that generates data (that you sell) can be termed a data product. Would you call Facebook a data product, a platform, or a service? The answer is a bit of all three. Facebook itself is a social networking service, the feature that lets you create apps on Facebook qualifies as a platform, and its recommendation engine would be a data product.

There are multiple ways to define and derive value from analytics. One of the best is to embrace analytics as an end-to-end solution (a data product) that is secure, scalable, and readily available. In fact, I am going to go out on a limb here and say that “all analytics projects need to be data products”.

What should motivate companies to move towards data products?

For business:

– Monetization – Instead of limiting a data solution to a particular analytical challenge, enterprises must assess the viability of internalizing it as a data product that can be reused, scaled across their business ecosystems, and even sold to clients. Vodafone leveraged a location intelligence platform to build a mobile data business solution called Vodafone Analytics. It was initially deployed as an accessible interface to the exponentially growing volumes of mobile data, offering location-based insights into Vodafone’s consumer base. The solution is now being offered to analytically naïve enterprises to furnish location-specific insights into footfall traffic, demographics, visitor analysis, and beyond.

– Democratization – Enterprise-wide access to data will replace the strenuous process of raising an access request with the IT team and then awaiting approval. A data product enables the dissemination of information while ensuring scalability, security, and quality assurance in the democratization process. The common bottlenecks of translating and gatekeeping data can be baked into the product itself, removing inefficiencies from the process. The traditional approach was to share raw data in Excel files, which professionals with limited technical data skills could not readily comprehend. Data products simplify this by presenting data as understandable, relevant information. An ideal tool lets stakeholders in different roles filter the data relevant to them and visualize it in a comprehensible way. Royal Bank of Scotland, for example, has a Net Promoter System, enabled by visual analytics software, whose intuitive interface democratizes data across all departments of the organization. The customer service department uses this data in the form of customer feedback, which helps the organization draw actionable insights for improving its customer orientation.

– Avert Business Risks and Identify New Opportunities – Data products are considered critical in helping companies prepare for and tackle strategic business risks while uncovering new opportunities to improve operational efficiency. Implementing a data product in a business function automates the process to a significant extent, minimizing the chance of human errors that can have serious business implications. For a retailer, mistyping a weekly demand forecast of 10,000 SKUs as 10,000,000 SKUs can result in serious financial loss. A data product that automates the process flow end to end, from issuing demand forecasts to relaying them to the procurement tool, can help the retailer avoid such a risk. Meanwhile, the enterprise-wide data democratization that data products make simple will allow departments to recognize snags in their operating procedures and find ways to improve their operational efficiency.

– Flexibility – Ideally, a data solution should adapt to the operational ecosystem of an enterprise rather than the other way around. This is where custom data products are finding relevance in the market. Custom AI assets, or what we call contextual AI, are an example: custom data products that can be aligned with an enterprise’s operational ecosystem to tackle data-related challenges and give impetus to an enterprise-wide analytical transformation across data extraction, feature engineering, algorithm development, performance monitoring, and more, contextualized down to every detail.
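
The mistyping risk described under Avert Business Risks can be guarded against inside the automated forecast-to-procurement flow itself. The following is a minimal sketch, assuming forecasts arrive as per-SKU weekly quantities and that recent order history is available. `ForecastLine`, `check_forecast`, `route_to_procurement`, and the 5x threshold are hypothetical names and choices for illustration, not part of any specific product:

```python
from dataclasses import dataclass


@dataclass
class ForecastLine:
    sku: str
    week: str
    quantity: int


def check_forecast(line: ForecastLine, history: list[int], max_ratio: float = 5.0) -> bool:
    """Return True if a forecast quantity looks plausible given recent history.

    Hypothetical rule: reject negative quantities, and reject anything above
    max_ratio times the largest recent weekly quantity for that SKU.
    """
    if line.quantity < 0:
        return False
    if not history:
        return False  # no baseline: send to a human for review
    return line.quantity <= max(history) * max_ratio


def route_to_procurement(lines, histories, max_ratio=5.0):
    """Split forecasts into those relayed automatically and those flagged for review."""
    approved, flagged = [], []
    for line in lines:
        if check_forecast(line, histories.get(line.sku, []), max_ratio):
            approved.append(line)  # would be relayed to the procurement tool
        else:
            flagged.append(line)  # held back for manual review
    return approved, flagged


histories = {"SKU-1": [9_500, 10_200, 9_800]}
lines = [
    ForecastLine("SKU-1", "2024-W10", 10_000),
    ForecastLine("SKU-1", "2024-W11", 10_000_000),  # three extra zeros typed by mistake
]
approved, flagged = route_to_procurement(lines, histories)
print([l.week for l in approved], [l.week for l in flagged])  # ['2024-W10'] ['2024-W11']
```

A ratio against the recent maximum is deliberately crude; a production data product might use statistical bounds from the forecasting model instead, but the point is the same: the check runs automatically before anything reaches procurement.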

For an operational boost:

– Scale – Building and running a separate data analytics solution for every individual challenge means putting the appropriate network infrastructure and adequate security measures in place each time. For instance, the security measures and infrastructure needed to drive consumption of an analytics solution in a single department differ from those needed for enterprise-wide consumption. A one-time investment of time and resources in a data product, however, saves enterprises the hassle of reconfiguring their network and security infrastructure, because a data product can be scaled and reused across different levels of the business ecosystem.

– Security – Democratization of data may come at the cost of data security. A database shared as an Excel file can easily be accessed and improperly shared by anyone in the enterprise. Basic measures have to be in place to ensure that only the required parties are granted access to the data. For instance, a retailer with multiple distributors and category managers across geographies can restrict each distributor’s access to data for only the geographies in which it operates. Doing this requires security measures at several levels, such as region-wise and role-wise data security and geography-wise data storage, which entail huge expenditure. A centralized data product, however, allows the data gatekeeper to exercise strict control and avert the risk of customer information leakage.

– Time – Data products that automate data preparation remove the hassle of manually cleaning, preparing, and analyzing raw data, and let users do so without writing any code. This frees up the time and money invested in manual processes, reducing the turnaround time for insight generation.

– Integration – Analytical insights translate into value only when they are turned into meaningful actions. This means any insight offered by a data solution has to be integrated into a downstream application that initiates an action bringing business value. In a retail supply chain, for example, no matter how accurate an SKU quantity forecast is perceived to be, if it is not fed into the procurement application, the forecast is stripped of its value. A data product automates feeding the analysis into downstream applications to derive tangible value. Going back to the example, any change to the forecast in the data product interface will automatically be conveyed to the procurement tool. Similarly, a data product automates upstream integration. For instance, analyzing customer behavior typically requires a specific data model that integrates a particular subset of the many available data sources. Data products can automate the integration of those sources, sparing teams from repeating the same integration of data sources and analytical models in future customer behavior projects.
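
The downstream integration described in the Integration point, where a forecast change is conveyed automatically to the procurement tool, can be pictured as a simple publish/subscribe (observer) arrangement. This is a minimal in-process sketch under stated assumptions: `ForecastStore` and `ProcurementTool` are hypothetical stand-ins, and a real data product would more likely call the procurement system's API or publish to a message queue rather than invoke a Python callback:

```python
from typing import Callable


class ForecastStore:
    """Central store of SKU forecasts that pushes every change to subscribers."""

    def __init__(self) -> None:
        self._forecasts: dict[str, int] = {}
        self._subscribers: list[Callable[[str, int], None]] = []

    def subscribe(self, callback: Callable[[str, int], None]) -> None:
        self._subscribers.append(callback)

    def update(self, sku: str, quantity: int) -> None:
        if self._forecasts.get(sku) == quantity:
            return  # unchanged: nothing to propagate
        self._forecasts[sku] = quantity
        for callback in self._subscribers:
            callback(sku, quantity)


class ProcurementTool:
    """Hypothetical stand-in for the downstream procurement application."""

    def __init__(self) -> None:
        self.purchase_plan: dict[str, int] = {}
        self.updates_received = 0

    def on_forecast_change(self, sku: str, quantity: int) -> None:
        self.purchase_plan[sku] = quantity
        self.updates_received += 1


store = ForecastStore()
procurement = ProcurementTool()
store.subscribe(procurement.on_forecast_change)

store.update("SKU-1", 1_200)
store.update("SKU-1", 1_500)  # a planner edits the forecast in the interface
store.update("SKU-1", 1_500)  # no change, so nothing is sent downstream
print(procurement.purchase_plan, procurement.updates_received)  # {'SKU-1': 1500} 2
```

The design choice here is that the forecast store owns the "push": downstream tools register once and never need to poll, which is what removes the manual hand-off the paragraph above describes.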

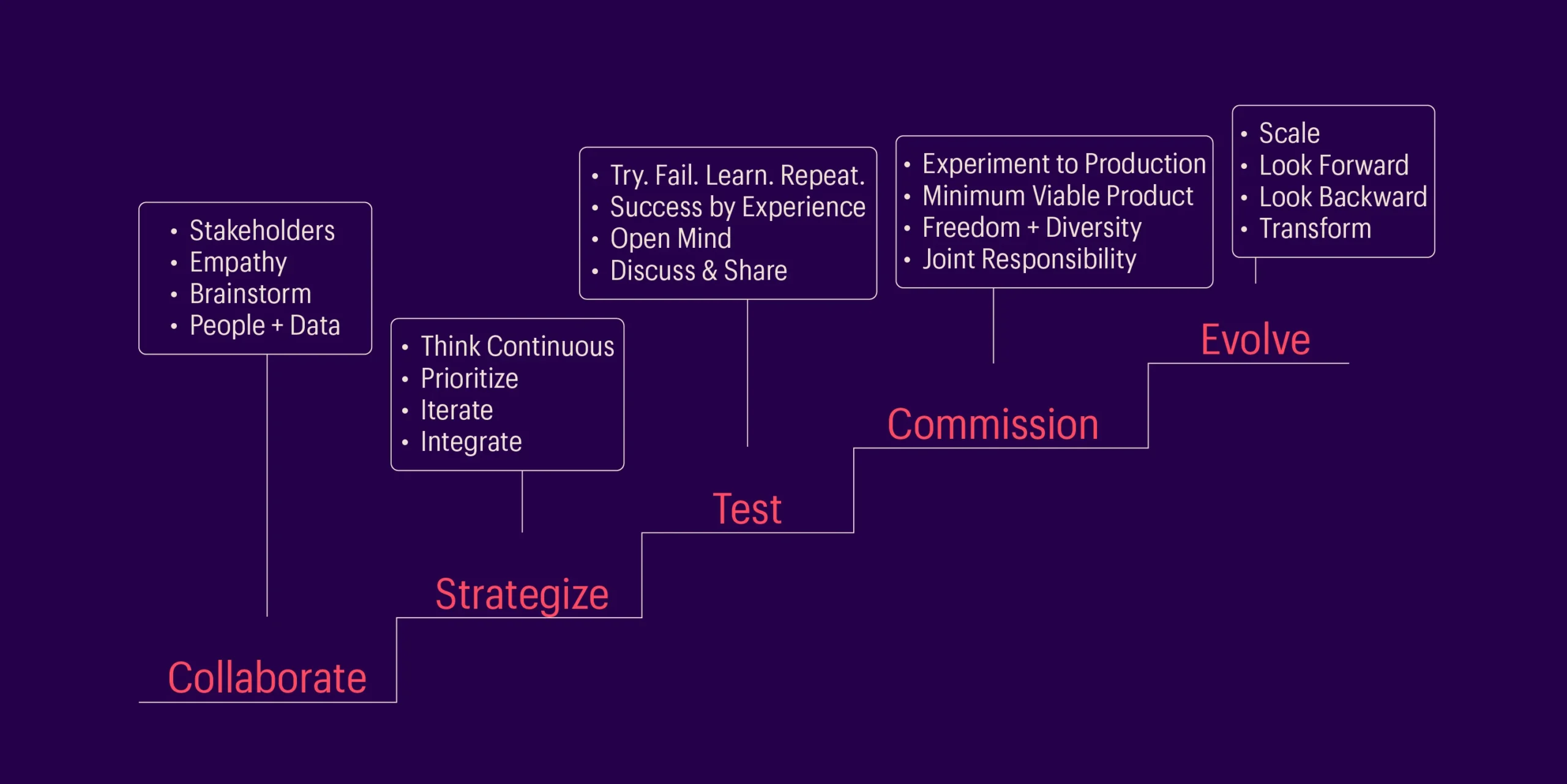

To successfully develop or internalize data products, an enterprise needs an appropriate framework to start with. It has to be agile enough to learn, adapt, and customize the framework to enable enterprise-wide transformational change. Over time, this is internalized and starts to reflect in its business DNA. Let’s look at the stepping stones to creating an ideal framework that teams can adopt:

Collaborate: Identify and work with key stakeholders. Build an in-depth understanding of business requirements and complement it with contextual suggestions, thereby creating a forum for collaboration.

Strategize: Continuous thinking. Instead of the traditional approach of projects with a fixed value and end date, embrace the modern approach of organizing around continuous, product-like workstreams that iterate and evolve according to the value delivered.

Test: Try-Fail-Learn-Repeat-Repeat-Repeat. Testing is about trying out different options and finding what works and what doesn’t. Continuous rounds of testing build experience, a pillar of success for enterprises in the market.

Commission: The true value of data scientists lies not only in building great ML models that answer real business needs but also in creating scalable, deployable analytical solutions. They need to either collaborate with engineers or be full-stack data scientists to enable production-grade data apps.

Evolve: Experts in firms ought to scale their products and keep striving for continuous improvement. Iterate by focusing on what will increase success, along with feedback on the lessons learned. One needs to go from changing processes to changing mindsets, growing beyond the scope of data science, to unfold a truly transformational effect.

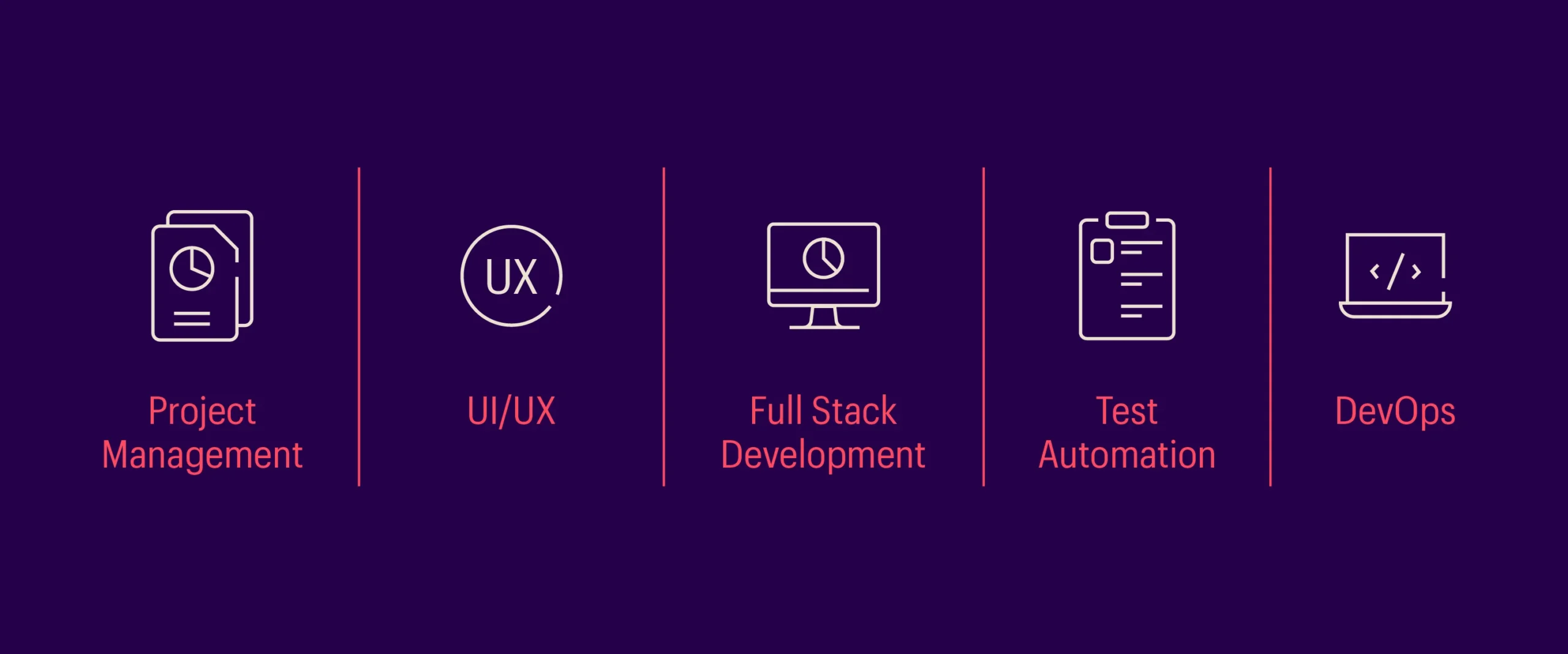

The development phases of a data product are akin to those of general products. The thought process behind any product development is identifying opportunities to meet customers’ needs, building a prototype around those needs, and then evaluating and re-evaluating its functionality. Beyond the generic product development arena, however, the data component adds to the development complexity. In this context, firms are advised to preach and practice cross-functional collaboration, to identify and prioritize long-term data product opportunities, and yet to start with a less complicated approach.

Building a data product is like cooking a fine dish: you need the right ingredients at the right time. The various functions that need to collaborate to develop an end-to-end analytics data product are:

Product management: the glue that binds cross-functional teams together to achieve product success.

Full stack development: designs the product with user interaction and experience in mind.

Test automation: automated testing to ensure quality control and assurance.

DevOps: plan for operationalization before jumping into development.

TERMINUS — The result

Adhering to quality standards that help enterprises rise above a noisy supplier market, MathCo has leveraged its data product portfolio to create success stories for many Fortune 500 companies. Here is one such example:

How MathCo deployed a data product to help a premium shoe manufacturer boost its brand outreach:

A US-based premium shoe manufacturer wanted a tool that could measure the efficacy of its marketing campaigns. The client’s decentralized decision-making process added a layer of complexity to identifying which campaign events were generating high ROI.

Solution summary:

MathCo successfully developed and deployed an impact measurement tool that could seamlessly accept input data, analyze in real time the impact of experimental changes made to the campaign, and offer actionable insights to help the client optimize its marketing campaign investments.

Tool architecture framework:

- A presentation layer that featured user access control and enabled visualization of input and output data.

- An application layer serving as the interface between the user interface and the backend applications.

- A model runner that executed statistical models designed to determine the impact of campaign events. The models could be altered whenever deemed necessary.

- A data layer that held the input data and stored the results. Before the tool could be used, the KPIs to be measured and the optimal level of data granularity had to be identified. Next, parameters such as geography and time were defined for the events whose impact had to be measured, and filters were created on these parameters to isolate the individual impact of each event.
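
The case study does not say which statistical models the model runner executed. Purely as an illustration, the sketch below plugs a difference-in-differences estimator into a hypothetical `ModelRunner` registry, with parameter filters on geography (`region`) and time (`week`) playing the role of the data-layer filters. The schema, the names, and the choice of difference-in-differences are all assumptions, not MathCo's method:

```python
from statistics import mean
from typing import Callable


def filter_rows(rows: list[dict], **criteria) -> list[dict]:
    """Keep rows matching every parameter filter, e.g. region="West"."""
    return [r for r in rows if all(r.get(k) == v for k, v in criteria.items())]


def diff_in_diff(rows, test_region, control_region, event_week, kpi="sales"):
    """Difference-in-differences estimate of an event's effect on a KPI.

    (test after - test before) - (control after - control before)
    """
    def avg(region: str, after: bool) -> float:
        values = [r[kpi] for r in filter_rows(rows, region=region)
                  if (r["week"] >= event_week) == after]
        return mean(values)  # raises StatisticsError if a period has no rows

    test_change = avg(test_region, True) - avg(test_region, False)
    control_change = avg(control_region, True) - avg(control_region, False)
    return test_change - control_change


class ModelRunner:
    """Hypothetical registry of swappable impact models (the 'model runner' layer)."""

    def __init__(self) -> None:
        self._models: dict[str, Callable[..., float]] = {}

    def register(self, name: str, model: Callable[..., float]) -> None:
        self._models[name] = model  # registering the same name replaces the model

    def run(self, name: str, rows: list[dict], **params) -> float:
        return self._models[name](rows, **params)


# Hypothetical data layer: weekly sales per region; a campaign event starts in week 3 in West.
rows = [
    {"region": "West", "week": 1, "sales": 100}, {"region": "West", "week": 2, "sales": 100},
    {"region": "West", "week": 3, "sales": 130}, {"region": "West", "week": 4, "sales": 130},
    {"region": "East", "week": 1, "sales": 100}, {"region": "East", "week": 2, "sales": 100},
    {"region": "East", "week": 3, "sales": 110}, {"region": "East", "week": 4, "sales": 110},
]
runner = ModelRunner()
runner.register("did", diff_in_diff)
lift = runner.run("did", rows, test_region="West", control_region="East", event_week=3)
print(lift)
```

Here West rises by 30 after the event while the East control rises by 10, so the estimated lift attributable to the event is 20. Keeping models behind a name in a registry is one way to get the "models could be altered whenever deemed necessary" property: a new model is registered under the same name without touching the layers around it.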

Impact:

- Developed an impact measurement framework to determine, in real time, the impact of over 100 experiments conducted across marketing, pricing, and CRM functions.

- Running the tool on a serverless platform reduced the overall cost of impact measurement.

- Accurate forecasts from the tool at multiple levels enabled efficient sales planning and management by the revenue management team.

To learn more about the solution and the sustained impact it had on the client’s operations, read this case study.

EVOLUTION — The growth

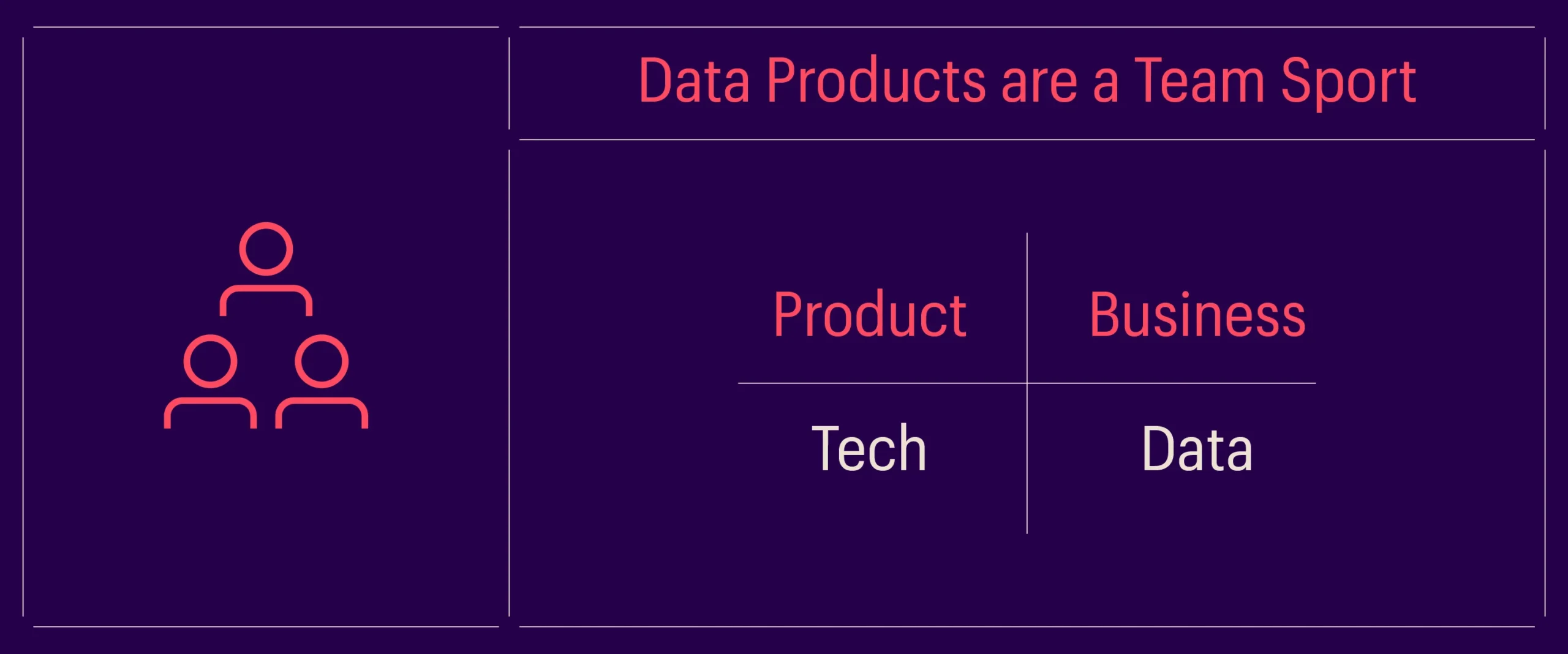

From a business perspective, decision-makers in product firms play vital roles in creating the process funnel for data products and ensuring its smooth execution. With business and technology teams as the major decision-makers, the presence of both teams in discussions about the feasibility of new product initiatives is crucial. While the business team can project the market opportunity for such initiatives, the tech team can confirm whether they are feasible to execute. Close collaboration between these domain experts can therefore translate into the fruitful identification and materialization of data product opportunities.

Data scientists must have a clear understanding of clients’ business needs, something generally perceived to be the concern of business executives. An in-depth understanding of client needs gives data scientists a definitive direction in their role on the project. Meanwhile, data scientists must play their part in the enterprise-wide democratization of data, which includes opening access to raw data and everything related to data models across all stages of a project. However, democratizing data to analytically naïve individuals may not always be useful and can even pose a threat to customer confidentiality. For instance, incorrectly executing a process on an open database can leak sensitive client information. To avert such risks, enterprises must promote data literacy across all teams. Including data science teams in discussions about product and business strategy will give a significant boost to the cross-team collaboration an enterprise needs and lend impetus to data product development.

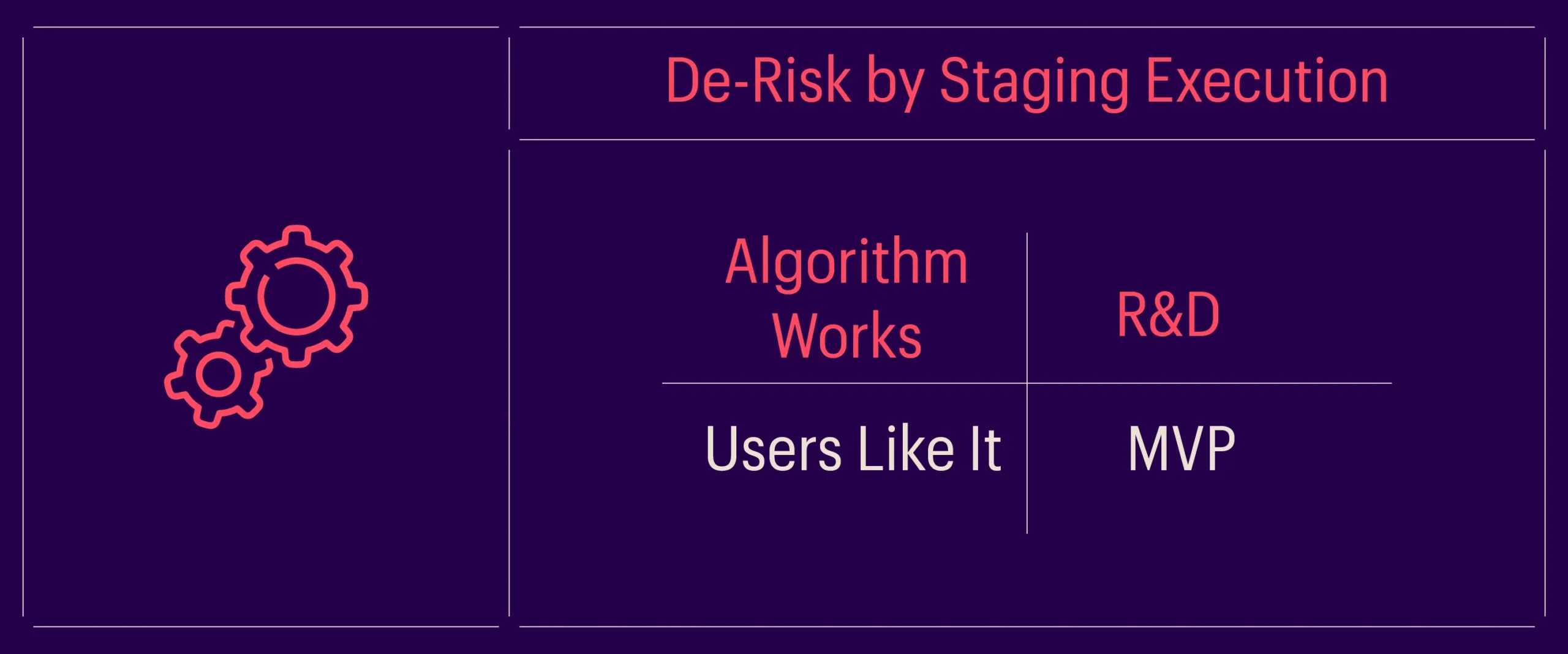

Data products are the result of a scientific combination of data and algorithms. While this sounds straightforward, getting there is an uphill task that involves finding common ground between the business viability of data product concepts and the R&D effort required. Over-investing in R&D before establishing the business prospects of a data product concept can lead to severe financial losses, and under-investing carries its own risks, such as releasing a minimum viable product (MVP) built on an inadequate data model, which is bound to be poorly received by users. To reach a middle ground that supports the release of a technologically superior data product with maximum business prospects, consider some of these MVP approaches:

Lightweight models: Start small, ship faster, and build upon the model over time.

New data sources: Find innovative methods to acquire data.

Scope box to MVP: Reduce the scope of the challenge, then build and launch an MVP.

No big bang: Start manual and automate over time to scale up.

The upside down: Engineering and data science run together.

Opportunities for data products are ample. But without an effective business and technological approach, most enterprises fail in their costly attempts to realize a data product vision. Seamless collaboration between stakeholders ranging from business leaders to data scientists, investment that never loses sight of future business prospects, and a simple approach to start with are some of the basic conditions that must be met to accelerate data product development that adds value to businesses.

Some pearls of wisdom:

- Involve business in sprints

- Full stack data scientist

- Say no to Gantt charts and embrace agile methodology

- Collaborate amongst different & relevant folks

- Ownership lies with the team