Understanding the need for Disaster Recovery

According to the Uptime Institute’s Annual Outage Analysis 2021 report, 40% of company outages or service interruptions cost between $100,000 and $1 million, with approximately 17% costing more than $1 million [1]. Therefore, for operators of mission-critical systems, recovering data from sudden outages and data breaches through effective recovery measures and backup plans remains a priority.

Disaster Recovery (DR) is an implementation strategy involving a set of tools, policies, and procedures that enable the recovery or restoration of critical technology infrastructure and systems in the aftermath of natural or human-made disasters. It helps organizations regain access and functionality to IT infrastructure following events that can disrupt business continuity.

However, a process combining DR and business analytics is complex, tedious, and exorbitant, and it will take years to implement, depending on its complexity. The DR process requires a comprehensive analysis of geographically separated secondary data centers, techniques, and different DR solutions. It also involves building a backup plan to ensure business continuity in case of disasters/failures. To navigate these issues surrounding DR, emerging big data technologies are being considered as potential problem solvers. For instance, when different virtual machine (VM) instances are clubbed together, they form a single cluster possessing better availability and auto-scaling capabilities to meet DR requirements. Services such as BigQuery can meet disaster recovery constraints without requiring any additional effort, whereas resources such as cloud storage-based applications require extra effort for implementation.

A highly resilient and efficient cloud data warehouse alternative is Google BigQuery, a serverless and scalable data warehouse that has an inbuilt query engine to execute SQL queries in terabytes of data. BigQuery’s high throughput and better execution time make it an excellent candidate for executing terabytes of data in mere seconds. Many organizations have now adopted it to achieve enhanced performance levels without creating or rebuilding indexes, shifting their focus to data-driven decision-making applications and the seamless collection and storage of data beyond siloes. For instance, teams are now using BigQuery to perform interactive ad-hoc queries of read-only datasets. It enables data engineers and analysts to directly build and operationalize business models on PlanetScale, Azure Cosmos DB, or static backend databases with structured or semi-structured data using simple SQL in a fraction of time. All this is heralding a new era of accelerated innovation, making improved agility and scalability possible.

Creating preemptive data backups through Google BigQuery

BigQuery has the advantages of Dremel and Google’s own distributed systems to process and store large-scale datasets in column format. BigQuery also incorporates a parallel execution process across multiple VM instances using a tree architecture and scans the data table by executing SQL queries, thereby providing insightful outcomes in milliseconds. It can even process tables with millions of rows without slashing its execution time. Compared to conventional, on-premises tools that take longer times, BigQuery provides responses within moments. Businesses are leveraging this increased efficiency to enact faster decisions, develop visualized reports using aggregated data, and obtain precise results with high accuracy. Recently, a major social networking site democratized its data analysis process using BigQuery, utilizing a few widely used data tables across different teams, including Finance, Marketing, and Engineering [2]. This site identified Google BigQuery as the most effective tool alongside Data Studio in democratizing data analysis and visualization. In addition, the querying in BigQuery was observed to be easy and performant.

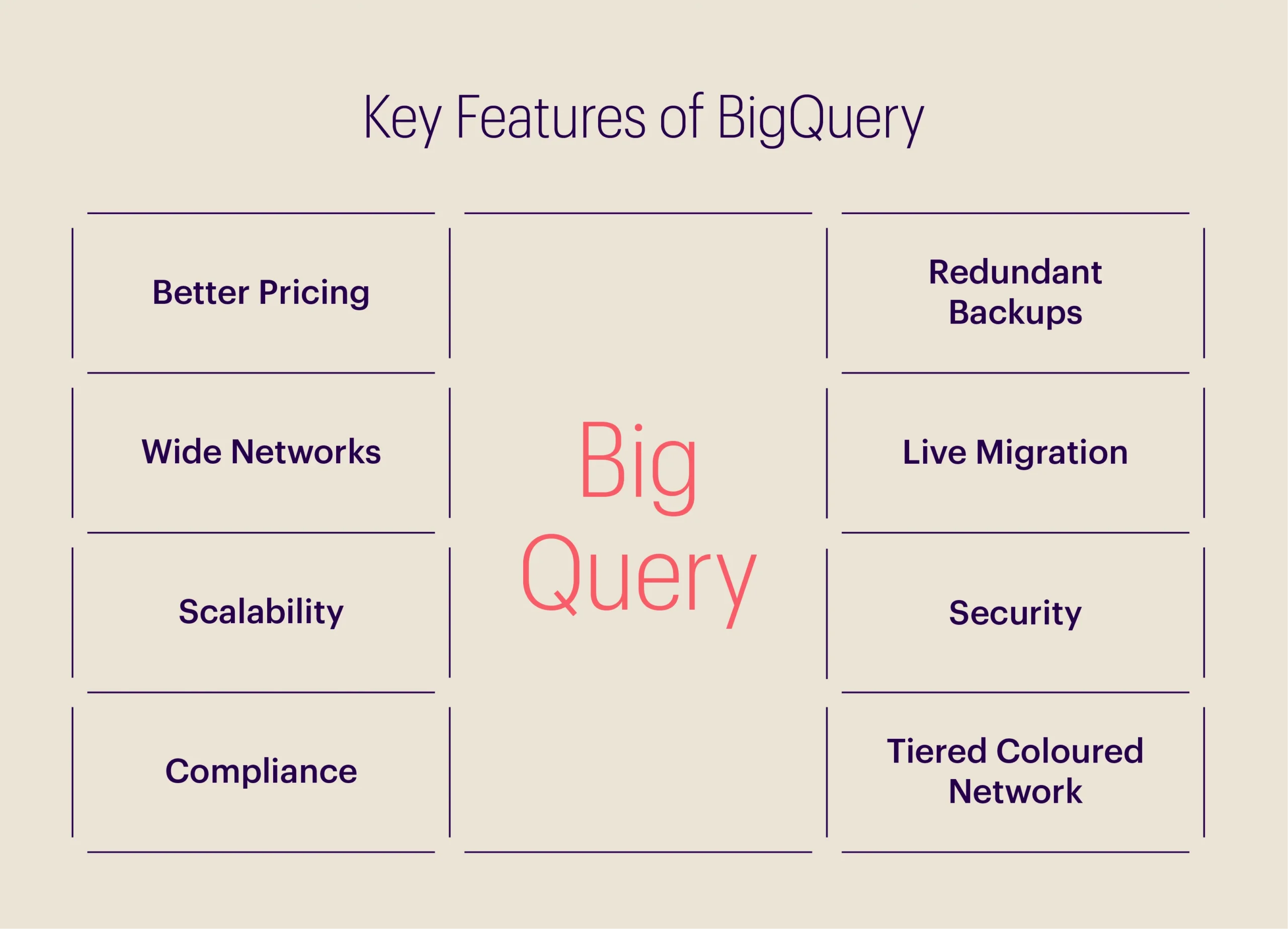

The features of Google BigQuery for disaster recovery are illustrated in the figure below.These features make Google BigQuery a better option for disaster recovery than similar applications in the market.

In general, disasters can be at a zonal or regional level. In the former scenario, the data will not be lost since BigQuery, by default, maintains copies of the data across different zones within a region. However, in the latter case, there can be a potential loss of data due to the unavailability of a replicated copy across different regions by default. To handle such challenges efficiently, copies of the dataset must be maintained in a different region. To do this, the BigQuery Data Transfer Service can be used to schedule the copy task.

Business use cases of Google BigQuery in Disaster Recovery

Disaster recovery is an integral part of business continuity planning (BCP), which is further defined using two concepts: a Recovery Time Objective (RTO) and a Recovery Point Objective (RPO) [3].

- An RTO is a part of the service level agreement (SLA) that has a maximum acceptable time for the application to be in the offline mode.

- An RPO has a maximum acceptable time for which the data might be lost due to a sudden system failure.

Generally, the smaller the values of RTO and RPO (which define how fast the application can recover from a failure or disruption), the higher the execution cost.

A use case for DR: Real-time analytics

In real-time analytics processes, data streams are continuously ingested from endpoint logs into BigQuery. For systems lacking BigQuery’s disaster recovery capability, ensuring seamless data processing and protection for an entire region would require continuous replication of data and providing slots in a different region. Nonetheless, assuming that the system is resilient to data adversity due to the implementation of Pub/Sub and Dataflow in the ingestion path, such a high level of storage redundancy results in a clear cost disadvantage. However, in a DR-enabled system, users only have to configure BigQuery data at a location of their choice, say zone A. From there, the data is exported to cloud storage under the archive storage class to zone B. In the event of a machine-level disruption, BigQuery continues to execute only within a few milliseconds of delay, with currently running queries continuing to be processed to support teams’ real-time analytics needs. In case of regional catastrophes resulting in data loss in one of the zones, DR allows users to create an exhaustive backup from storage in the other zone. Additionally, BigQuery also allows users to further strengthen their data recovery strategy by creating cross-region replicas of their datasets.

Mitigating data losses for the future

BigQuery is an excellent tool for storing and exploring granular data, offering advantages such as transparency in terms of cost, seamless and effective integration with other components, and better reliability and scalability compared to its competitors. Unlike conventional techniques, BigQuery does not require any additional configuration. It is predominantly adopted in most analytics projects to handle large-scale data by running simple queries. Considering the challenges businesses face in today’s times, with the increase in data volume and shortcomings of traditional data warehousing, a huge volume of data can be processed in the background to meet requirements using Google BigQuery. It also presents itself as an effective choice if database resources are limited.

With the increase in the centralization of data in IT systems and the growing significance of cloud services, a significant number of database management systems are being outsourced to different cloud service providers. The outsourcing of an entire database to an external provider requires a scalable processing system to access and process all the information from the database. For businesses looking to achieve this on a single platform, Google BigQuery offers a comprehensive, secure, and multifunctional solution to process large-scale outsourced data at significant speed in contrast to other cloud service platforms. Looking ahead, it seems clear that most data analytics and ICT organizations will shift their focus from traditional DBMS to Google BigQuery without having to worry about the significant costs associated with data processing and maintenance of complex infrastructure.

Bibliography

[1] Sullivan, Erin, Paul Crocetti, and Ivy Wigmore. “What Is Disaster Recovery (DR)?” Disaster Recovery, May 26, 2021. https://www.techtarget.com/searchdisasterrecovery/definition/disaster-recovery#:~:text=The%20Uptime%20Institute’s%20Annual%20Outage,cost%20more%20than%20%241%20million

[2] Wagle, Prasad. “Democratizing Data Analysis with Google BigQuery.” Twitter, July 8, 2019. https://blog.twitter.com/engineering/en_us/topics/infrastructure/2019/democratizing-data-analysis-with-google-bigquery

[3] “Disaster Recovery Planning Guide | Cloud Architecture Center | Google Cloud.” Google, n.d. https://cloud.google.com/architecture/dr-scenarios-planning-guide